How to Use a Load Balancer as an API Gateway

One of the biggest trends in application delivery is the growing importance of integration capabilities. Web applications are consumed not only by end users but also by other applications and services that build on their functionality.

The API economy, a set of models that enable the integration of systems, data, and applications, is a key component of application delivery. Think of the applications you use today: it is hard to find one without some integration feature critical to its operation, whether that is an integration with social media accounts, authentication services, or external data sources.

In fact, many business operations rely completely on these integrations, and APIs are now viewed as major business differentiators. To be delivered successfully, business-critical APIs must be available at all times, published securely, easy to manage, and observable in operation. As a result, it is becoming more common for an API gateway to form part of the application infrastructure.

What is an API Gateway?

API is an acronym for Application Programming Interface, an interface that allows two applications to talk to one another. APIs are typically delivered over HTTP(S). An API gateway is a management tool that receives all API calls and routes each one to the appropriate backend service.
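To make the routing idea concrete, here is a minimal Python sketch of the prefix-based routing an API gateway might perform. The service URLs and the `ROUTES` table are hypothetical, and real gateways typically also match on host, HTTP method, and headers.

```python
from __future__ import annotations

# Hypothetical routing table: URL path prefix -> backend service.
ROUTES = {
    "/orders": "http://orders.internal:8080",
    "/orders/returns": "http://returns.internal:8080",
    "/users": "http://users.internal:8080",
}

def route(path: str, routes: dict[str, str] = ROUTES) -> str | None:
    """Return the backend for the longest matching path prefix, or None."""
    best = None
    for prefix in routes:
        # Compare whole path segments so "/users" does not match "/usersettings".
        if path == prefix or path.startswith(prefix + "/"):
            if best is None or len(prefix) > len(best):
                best = prefix
    return routes[best] if best is not None else None
```

For example, `route("/orders/returns/42")` selects the returns service because the longest matching prefix wins, while `/usersettings` matches nothing because prefixes are compared on whole path segments.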

In the early days of developing applications that use APIs to communicate, there was a clear need for API management: a way to perform generic functions such as authentication, throttling, and SSL encryption/decryption separately from application business logic. Developers began publishing access to their APIs through an API gateway at a single edge endpoint, which could perform these functions and also route requests to the correct endpoints with minimal delay.
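The benefit of keeping cross-cutting functions out of business logic can be sketched in Python with a wrapper that checks an API key before the handler ever runs. The `X-API-Key` header, the `VALID_KEYS` set, and the dictionary-based request shape are assumptions made for illustration, not any particular gateway's interface.

```python
import functools

# Hypothetical API keys; a real gateway would consult a key store or identity provider.
VALID_KEYS = {"key-123"}

def require_api_key(handler):
    """Wrap a handler so the API key check runs before any business logic."""
    @functools.wraps(handler)
    def wrapped(request: dict) -> dict:
        headers = request.get("headers", {})
        if headers.get("X-API-Key") not in VALID_KEYS:  # hypothetical header name
            return {"status": 401, "body": "unauthorized"}
        return handler(request)
    return wrapped

@require_api_key
def get_order(request: dict) -> dict:
    # Business logic only: unauthenticated requests never reach this point.
    return {"status": 200, "body": f"order {request['order_id']}"}
```

Because the check lives in the wrapper, the same authentication policy can be applied to every handler without touching their code, which is the separation an API gateway provides at the network edge.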

Load Balancers and Application Delivery Controllers (ADCs)

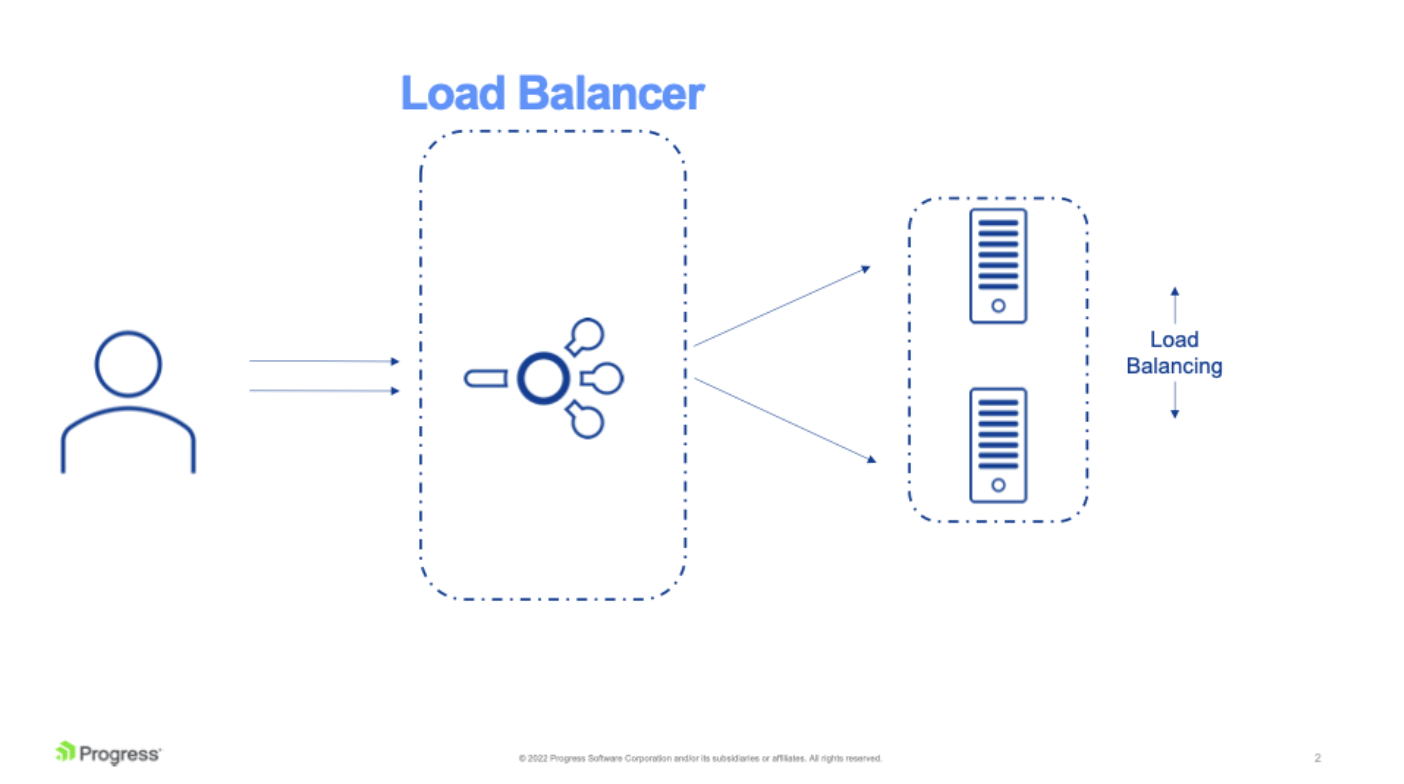

Load balancers have existed since the 1990s and have served to distribute traffic across multiple servers, providing redundancy and allowing loads to scale.
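Round robin is one of the simplest ways to distribute traffic. A minimal Python sketch, assuming a fixed pool of hypothetical server addresses and no health checking (production load balancers also offer methods such as least connections and remove failed servers from the pool):

```python
import itertools

class RoundRobinBalancer:
    """Hand each new request to the next server in a fixed pool, in turn."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("server pool must not be empty")
        self._cycle = itertools.cycle(list(servers))

    def next_server(self):
        return next(self._cycle)

# Hypothetical backend addresses.
lb = RoundRobinBalancer(["10.0.0.11", "10.0.0.12", "10.0.0.13"])
picks = [lb.next_server() for _ in range(5)]
# picks == ["10.0.0.11", "10.0.0.12", "10.0.0.13", "10.0.0.11", "10.0.0.12"]
```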

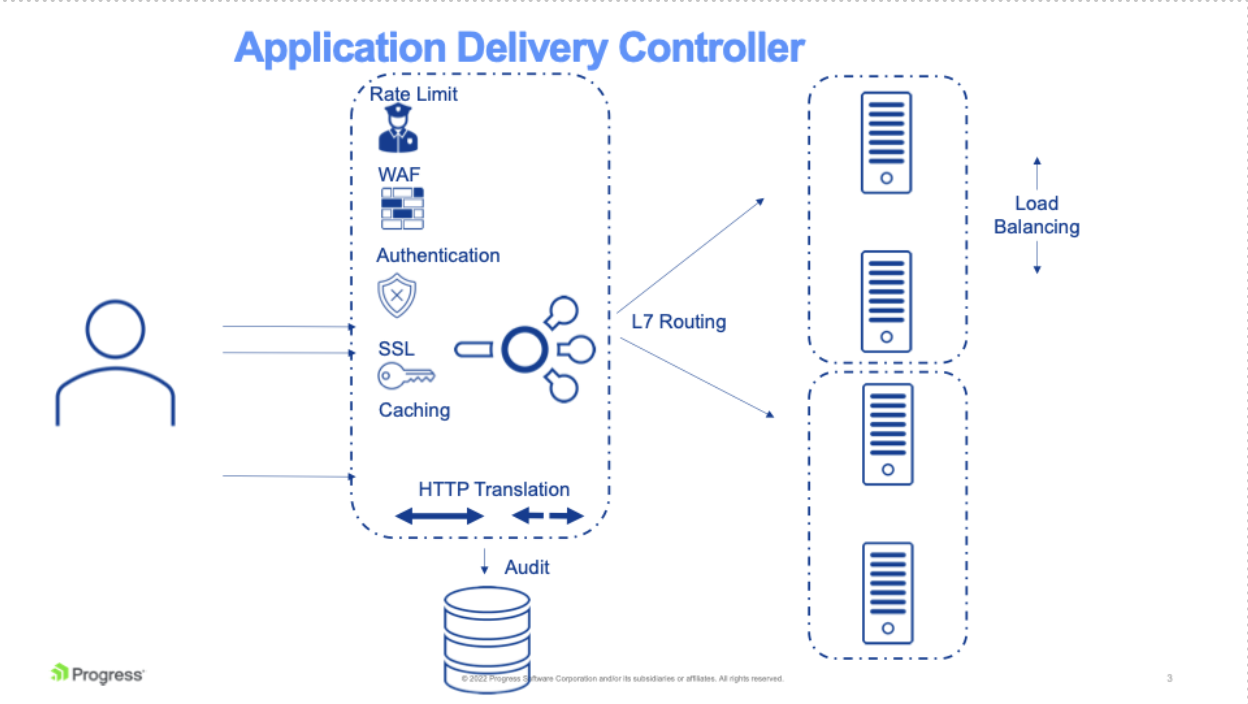

As networks evolved, the need for more functionality at the edge turned the simple load balancer into the application delivery controller (ADC), which added advanced Layer 7 features: security functions such as HTTP request filtering, and web optimizations such as SSL offloading and caching.
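Edge caching, one of the ADC optimizations mentioned above, can be sketched as a small time-to-live cache in front of an origin server. This is a simplified illustration: the `TTLCache` class and the `origin` function are hypothetical, and the sketch ignores the HTTP `Cache-Control` semantics a real ADC would honor. The injectable clock exists only so the expiry can be demonstrated.

```python
import time
from typing import Callable, Dict, Tuple

class TTLCache:
    """Serve repeated lookups from memory until an entry is older than ttl seconds."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._store: Dict[str, Tuple[float, str]] = {}

    def get(self, key: str, fetch: Callable[[str], str]) -> str:
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]              # fresh: answer without touching the backend
        value = fetch(key)             # miss or stale: go to the origin server
        self._store[key] = (now, value)
        return value

# Usage with a fake clock and a hypothetical origin that records each call.
calls = []
def origin(path: str) -> str:
    calls.append(path)
    return f"content of {path}"

fake_now = [0.0]
cache = TTLCache(ttl=30, clock=lambda: fake_now[0])
cache.get("/catalog", origin)   # miss: fetched from origin
cache.get("/catalog", origin)   # hit: served from cache
fake_now[0] = 31.0
cache.get("/catalog", origin)   # expired: fetched from origin again
```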

The term reverse proxy refers to using this advanced ADC functionality whether or not load balancing is needed. Traditionally, network operations teams have managed load balancers to keep traffic loads under control and maximize application availability, but this work has been decoupled from the actual application development.

What Does an API Gateway Do?

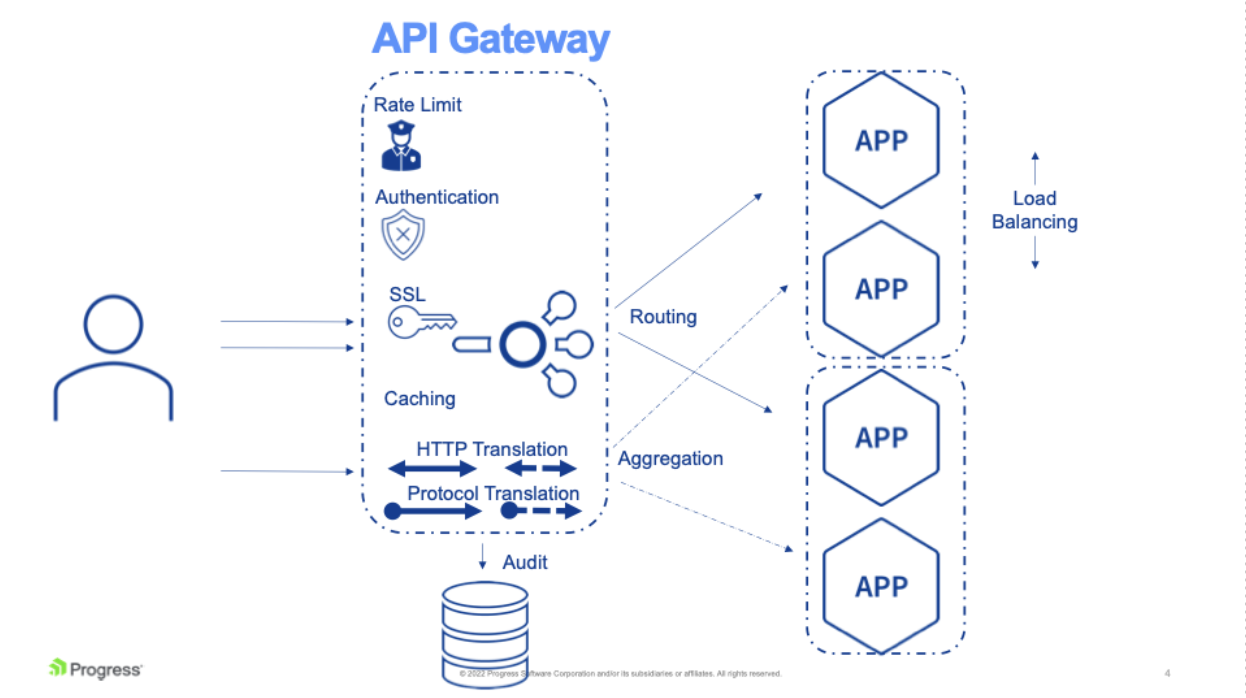

An API gateway provides services that simplify the development of APIs. Its functionality can span almost everything outlined above for ADCs, including:

- Authentication of requests

- Filtering and routing of requests

- Termination of SSL

- Caching of data

- Rate limiting

- Logging and metrics
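Rate limiting is often implemented with a token bucket. The Python sketch below is one such implementation, offered as an illustration rather than as how any particular gateway does it; the rate and capacity values in the usage are arbitrary, and the fake clock is there only to make the behavior reproducible.

```python
import time
from typing import Callable

class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # a gateway would typically answer with HTTP 429 Too Many Requests

fake_now = [0.0]
bucket = TokenBucket(rate=1, capacity=3, clock=lambda: fake_now[0])
burst = [bucket.allow() for _ in range(4)]   # [True, True, True, False]
fake_now[0] = 1.0
after_refill = bucket.allow()                # True: one token refilled after 1 s
```

The capacity controls how large a burst a client may send, while the rate sets the sustained number of requests per second.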

Some API gateways can perform protocol translation, converting an incoming request from one format to another, for example from HTTP/JSON to gRPC. Another feature used in some cases is request aggregation: a single request received by the API gateway triggers multiple requests to different endpoints, and the gateway returns a single combined response to the client.
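Request aggregation can be sketched in Python with `concurrent.futures`: one incoming request fans out to several backends in parallel, and the gateway merges the answers into a single response. The three backend functions are hypothetical stand-ins for real HTTP or gRPC calls, and error handling and timeouts are omitted for brevity.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# Hypothetical backend calls; in practice these would be HTTP or gRPC requests.
def fetch_profile(user_id: int) -> dict:
    return {"id": user_id, "name": "Ada"}

def fetch_orders(user_id: int) -> list:
    return [{"order": 101}, {"order": 102}]

def fetch_recommendations(user_id: int) -> list:
    return ["sku-9", "sku-4"]

BACKENDS: Dict[str, Callable[[int], object]] = {
    "profile": fetch_profile,
    "orders": fetch_orders,
    "recommendations": fetch_recommendations,
}

def aggregate(user_id: int) -> dict:
    """Fan one client request out to every backend in parallel and merge the results."""
    with ThreadPoolExecutor(max_workers=len(BACKENDS)) as pool:
        futures = {name: pool.submit(fn, user_id) for name, fn in BACKENDS.items()}
        return {name: fut.result() for name, fut in futures.items()}
```

Running the calls concurrently means the client waits roughly as long as the slowest backend rather than the sum of all three.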

Load Balancers and API Gateways: How Do They Function Together?

Apart from one or two newer concepts, load balancers and API gateways overlap in much of their functionality. A question Network or IT Operations teams will need to answer is whether a separate API gateway is needed, or whether its functionality can be consolidated onto a traditional load balancer.

Answering this question will depend on two factors:

- Feature set

  - Depending on the vendor and how the APIs are designed, it is quite likely that most, if not all, of the functionality in use will be available on the load balancer. To confirm this, complete a full audit of the API gateway functionality currently required and compare it with the functionality available on the existing load balancer.

- Organization structure

  - Some organizations prefer API gateway operations to be managed by DevOps teams, separate from the Network Operations teams that manage the load balancer. DevOps teams may require frequent updates to the API gateway configuration, which may not match how load balancer configuration changes are typically made. Now that load balancers include support for configuration management, this may not be an issue.
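The feature audit described above comes down to a set difference between what the APIs rely on and what the load balancer provides. A minimal sketch; the feature names are placeholders and do not describe any specific product.

```python
# Hypothetical inventories: fill these in from the API gateway currently in use
# and from the load balancer vendor's documentation.
required_by_apis = {
    "jwt_authentication", "path_routing", "tls_termination",
    "rate_limiting", "request_logging", "grpc_translation",
}
available_on_lb = {
    "jwt_authentication", "path_routing", "tls_termination",
    "rate_limiting", "request_logging", "waf",
}

def feature_gap(required: set, available: set) -> set:
    """Features the APIs rely on that the load balancer does not provide."""
    return required - available

missing = feature_gap(required_by_apis, available_on_lb)
```

An empty result suggests the gateway functions could be consolidated onto the load balancer; anything left over, here `grpc_translation`, needs a workaround or a separate gateway.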

Progress Kemp LoadMaster has the following features for enabling successful API delivery:

- SubVSs (sub-virtual services) for routing API traffic between services

- Content rules for HTTP request/response processing

- Rate Limiting Support

- Edge Security Pack for Pre-Authentication

- Web Application Firewall (WAF)

The LoadMaster load balancer also provides a RESTful API that can be used with a wide range of scripting tools and applications to automate configuration.

LoadMaster is the premier choice for organizations requiring load balancing. With more than 100,000 deployments, LoadMaster offers the most capable solutions for load balancing, to ensure applications are always on.

Maurice McMullin

Maurice McMullin was a Principal Product Marketing Manager at Progress Kemp.
