Kemp Zero Trust Access Gateway Architecture Overview

In today’s infrastructure security landscape, zero trust means different things to different people. That isn’t surprising, given that it’s not a single product or “stack.” At its core, zero trust is three things.

First and foremost, it’s a strategy that dictates the posture taken toward every attempt to access application and network resources, regardless of source. Second, it’s an architecture: an approach to arranging the elements of your network, application, and identity environment to improve security posture through a “no trust by default” model. Third, it’s a context model that replaces binary decisions about granting application and service actions with decisions that take the conditions of each request into account. The underlying principle is to presume that any entity attempting to gain access, whether internal or external, could pose a threat. The result is a model that verifies first and then grants only limited access, with continued suspicion.
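A minimal Python sketch of the third point, assuming a hypothetical `AccessRequest` with made-up signal fields: a binary credential check compared with a context-aware decision that verifies first and then grants only limited access to an unmanaged, external client. It illustrates the idea only and is not how any particular product evaluates requests.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    DENY = "deny"
    ALLOW_LIMITED = "allow_limited"  # e.g. read-only access
    ALLOW = "allow"


@dataclass(frozen=True)
class AccessRequest:
    # Hypothetical signals; a real deployment would draw these from an IdP,
    # device management, and the network path of the request.
    credentials_valid: bool
    device_managed: bool
    internal_network: bool
    action: str  # e.g. "read" or "write"


def binary_decision(req: AccessRequest) -> bool:
    """Traditional model: a correct username and password is enough."""
    return req.credentials_valid


def contextual_decision(req: AccessRequest) -> Decision:
    """Zero-trust style: verify first, then grant only what the context supports."""
    if not req.credentials_valid:
        return Decision.DENY
    if req.device_managed and req.internal_network:
        return Decision.ALLOW
    # Unmanaged or external clients are treated with suspicion:
    # reads are permitted, anything else is refused.
    return Decision.ALLOW_LIMITED if req.action == "read" else Decision.DENY


byod_write = AccessRequest(credentials_valid=True, device_managed=False,
                           internal_network=False, action="write")
print(binary_decision(byod_write))      # True
print(contextual_decision(byod_write))  # Decision.DENY
```

The same valid credentials produce different outcomes depending on device and network context, which is the shift away from binary decision-making.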

If you were trying to protect a valuable physical asset, you wouldn’t lay out a red carpet from the entrance of the building that contains it straight to the secret door where it’s housed. Yet, this is the typical approach with published applications. The paths to where these services exist are clearly identifiable and, in some cases, advertised. Only after access is attempted is there some sort of validation as to whether the connecting entity has any business trying to get in or not.

A zero-trust approach aims to flip this model on its head by trying to discover the intent of the connecting entity earlier in the flow and deciding whether further communication is valid before allowing any closer access to the protected service.
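A minimal Python sketch of this ordering, assuming a hypothetical session check and a stand-in backend function: the gateway validates the request at the edge and only then forwards it, so untrusted requests never reach the protected service. The `/login` redirect target is an illustrative assumption.

```python
from typing import Callable, Optional


def make_gateway(validate_session: Callable[[Optional[str]], bool],
                 backend: Callable[[str], str]) -> Callable[[str, Optional[str]], tuple]:
    """Build a handler that checks intent before any traffic reaches the backend.

    `validate_session` and `backend` are hypothetical stand-ins for an
    IdP token check and the protected service.
    """
    def handle(path: str, session_token: Optional[str]) -> tuple:
        if not validate_session(session_token):
            # Unauthenticated clients are sent to sign in; the backend
            # is never contacted on their behalf.
            return (302, "/login")
        return (200, backend(path))
    return handle


backend_calls = []


def fake_backend(path: str) -> str:
    backend_calls.append(path)  # record what actually reached the service
    return f"content for {path}"


gateway = make_gateway(lambda tok: tok == "valid-token", fake_backend)

print(gateway("/payroll", None))           # (302, '/login')
print(gateway("/payroll", "valid-token"))  # (200, 'content for /payroll')
print(backend_calls)                       # ['/payroll']
```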

Traditional Security Modeling

In a traditional network security model, a castle-and-moat approach was often used: a strong perimeter was put in place, everything outside was assumed to be dangerous until proven otherwise, and everything inside the walls was assumed to be safe. This logic was flawed from the start, because there were always vectors a bad actor could use to get inside.

For example, if frontend web servers in the DMZ responded to properly negotiated sessions and had some sort of daisy-chained access to other internal resources, that path could be exploited. Likewise, email gateway servers that could act as a relay, or at minimum accepted inbound email (some of it potentially malicious), provided another door through the castle walls directly to users, their devices, and the access they have to systems and data.

What’s more, the logic of a strong perimeter has been further diluted because users, devices, and data now often live outside the perimeter anyway. BYOD, WFH, mobile, and SaaS trends have created a new paradigm that’s here to stay. Cloud, mobile, and IoT entities are often beyond the boundary of the firewall, so identity and access controls are needed that work consistently across both on-premises and cloud ecosystems.

Zero Trust – A New Paradigm

In light of all this, it’s no wonder that alternative models are being evaluated. IT security has become more complex than ever, and exploits have become more sophisticated. In many environments, legacy infrastructure sits right alongside less secure IoT technologies. Zero-day threats and successful polymorphic attacks have skyrocketed in volume, and more attacks than ever are identity-based. This is where zero trust shines: by assuming that any client could pose a threat, it places significant emphasis on context and signals when making access decisions. A correct username and password alone doesn’t mean application access should always be granted, and that’s the point. The network segment a request comes from, the exact nature of what is being attempted, and other factors matter and should be taken into account in a just-in-time, just-enough-access model based on strong policy.
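The just-in-time, just-enough-access idea can be sketched in Python as a grant that is both narrowly scoped and short-lived. The `Grant` type, the 30-minute lifetime, and the application names below are hypothetical choices for illustration, not a prescribed design.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Grant:
    """A hypothetical short-lived, narrowly scoped access grant."""
    subject: str
    application: str
    actions: frozenset    # just enough: only the actions the task needs
    expires_at: datetime  # just in time: access lapses on its own


def issue_grant(subject: str, application: str, actions: set,
                ttl: timedelta, now: datetime) -> Grant:
    return Grant(subject, application, frozenset(actions), now + ttl)


def is_permitted(grant: Grant, application: str, action: str, now: datetime) -> bool:
    # All three conditions must hold: right app, scoped action, not expired.
    return (grant.application == application
            and action in grant.actions
            and now < grant.expires_at)


t0 = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
g = issue_grant("alice", "crm", {"read"}, timedelta(minutes=30), t0)
print(is_permitted(g, "crm", "read", t0 + timedelta(minutes=10)))    # True
print(is_permitted(g, "crm", "delete", t0 + timedelta(minutes=10)))  # False
print(is_permitted(g, "crm", "read", t0 + timedelta(hours=1)))       # False
```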

While zero trust isn’t a new concept, only recently has it gotten onto a track for mass adoption. The principles of the network access control (NAC) architectures of 2004 were followed by Forrester coining the term ‘Zero Trust’ and, in 2014, by the first publication of Google’s BeyondCorp. The Cloud Security Alliance’s Software-Defined Perimeter working group has also done much to advance the concepts associated with zero trust. Over the past year, the National Institute of Standards and Technology (NIST) has published SP 800-207, Zero Trust Architecture, which describes zero trust for enterprise security architects and is intended to improve understanding of the approach.

All of these factors are expected to further increase the adoption of a zero-trust approach to application security for organizations.

How Kemp Helps Customers Address Zero Trust Objectives

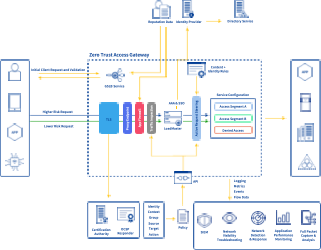

In response to overwhelming customer interest, we’re excited to launch our new zero trust access gateway architecture, which enables customers to simplify the application of complex access policies to published applications and services. Our heritage in application proxying, advanced traffic steering, and pre-authentication puts our advanced application load balancers in a prime position to contribute to a zero-trust strategy.

In the same way that load balancers are often used to consolidate certificate management, deep packet inspection, and application-level firewalling, their privileged position at the point of ingress and egress makes them well suited to access policy enforcement. Our solution combines native Layer 7 application publishing capabilities with IdP integration and complex client request distribution based on traffic characteristics. This means that various conditional inputs can be used to decide whether access to load-balanced services should be granted, and to control which types of communication or actions are allowed. For example, the source network segment, or whether the client is internal or external, can determine whether additional authentication factors are required. For object storage proxying, the solution can also define and enforce which S3 methods are allowed and under what circumstances.
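As a rough illustration of these conditional inputs, the following Python sketch classifies a client as internal or external from its source address, requires an extra authentication factor for external clients, and checks the HTTP method of an S3-style request against a per-zone allowlist. The address ranges and the method policy are assumptions; this is not Kemp’s configuration syntax.

```python
import ipaddress

# Hypothetical internal ranges; substitute your own address plan.
INTERNAL_NETWORKS = [ipaddress.ip_network("10.0.0.0/8"),
                     ipaddress.ip_network("192.168.0.0/16")]

# Hypothetical policy: which HTTP methods against an S3-compatible
# object store are allowed for internal vs. external clients.
S3_METHOD_POLICY = {
    "internal": {"GET", "HEAD", "PUT"},
    "external": {"GET", "HEAD"},  # no uploads or deletes from outside
}


def classify_source(client_ip: str) -> str:
    addr = ipaddress.ip_address(client_ip)
    return "internal" if any(addr in net for net in INTERNAL_NETWORKS) else "external"


def evaluate(client_ip: str, method: str) -> dict:
    zone = classify_source(client_ip)
    return {
        "zone": zone,
        # External clients are asked for an additional authentication factor.
        "require_mfa": zone == "external",
        "method_allowed": method.upper() in S3_METHOD_POLICY[zone],
    }


print(evaluate("10.1.2.3", "put"))
# {'zone': 'internal', 'require_mfa': False, 'method_allowed': True}
print(evaluate("203.0.113.7", "DELETE"))
# {'zone': 'external', 'require_mfa': True, 'method_allowed': False}
```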

To simplify building, applying, and maintaining the desired policies, we have developed an infrastructure-as-code framework based on the PowerShell wrapper of our RESTful API. This lets customers use machine-readable definition files to provision their application security policies, and example policy configuration files are available to streamline getting started. The main goals of this approach are to help customers reduce the cost of implementation, improve scalability by eliminating manual tasks, and remove inconsistencies and misconfigurations, the number one cause of degraded application experience and outages.
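The framework itself uses the PowerShell wrapper of the RESTful API; the Python sketch below only illustrates the general policy-as-code principle of validating a machine-readable definition before it is provisioned. The JSON layout and field names are hypothetical and do not reflect the format of Kemp’s example files.

```python
import json

# A hypothetical policy definition file. This is NOT Kemp's file format;
# it only illustrates declaring access policy as data.
POLICY_JSON = """
{
  "services": [
    {"name": "crm",   "allowed_groups": ["sales"],   "allowed_methods": ["GET", "POST"]},
    {"name": "files", "allowed_groups": ["finance"], "allowed_methods": ["GET", "PUT", "PATCHY"]}
  ]
}
"""

VALID_METHODS = {"GET", "HEAD", "POST", "PUT", "PATCH", "DELETE", "OPTIONS"}
REQUIRED_KEYS = {"name", "allowed_groups", "allowed_methods"}


def validate(policy: dict) -> list:
    """Return a list of problems; an empty list means the file is safe to apply."""
    problems = []
    seen = set()
    for i, svc in enumerate(policy.get("services", [])):
        missing = REQUIRED_KEYS - set(svc)
        if missing:
            problems.append(f"service #{i}: missing {sorted(missing)}")
            continue
        if svc["name"] in seen:
            problems.append(f"{svc['name']}: duplicate service name")
        seen.add(svc["name"])
        if not svc["allowed_groups"]:
            problems.append(f"{svc['name']}: no groups allowed (service unreachable)")
        for m in svc["allowed_methods"]:
            if m not in VALID_METHODS:
                problems.append(f"{svc['name']}: unknown method {m!r}")
    return problems


print(validate(json.loads(POLICY_JSON)))
# ["files: unknown method 'PATCHY'"]
```

Catching a typo such as `PATCHY` before provisioning is the kind of misconfiguration that manual, click-by-click changes tend to let through.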

Common Use Cases

In general, the philosophy outlined above is used to help customers address well-established use cases such as application access for remote users, finer-grained access control for internal users, and general compliance with an organization’s infosec posture. Several traditional solutions can address some of these, but they are not always suitable.

For example, some organizations have relied exclusively on VPN-based access for remote users. A growing concern with this approach is that, unless highly granular network access control rules are manually maintained, lateral movement from unmanaged and untrusted devices is typically possible. In an increasingly common BYOD scenario, if a device that IT has no control over can gain network access to the ecosystem, there is little to stop it from causing some level of damage if it is compromised. An approach that prevents remote devices from “roaming around” the ecosystem in the first place improves security posture.
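To make the lateral-movement concern concrete, here is a small Python sketch comparing what a compromised remote device could reach under a flat, network-level VPN versus an application gateway that publishes only entitled applications. The subnet, application addresses, and entitlements are hypothetical.

```python
import ipaddress

# Hypothetical environment: a flat internal subnet and the two
# applications published for remote users.
INTERNAL_SUBNET = ipaddress.ip_network("10.20.0.0/28")
PUBLISHED_APPS = {"crm": "10.20.0.5", "wiki": "10.20.0.9"}
USER_ENTITLEMENTS = {"alice": {"crm"}}


def vpn_reachable(user: str) -> set:
    # A network-level tunnel typically places the device on the subnet, so
    # every host is reachable unless extra ACLs are maintained by hand.
    return {str(h) for h in INTERNAL_SUBNET.hosts()}


def gateway_reachable(user: str) -> set:
    # An application gateway only proxies to apps the user is entitled to.
    return {PUBLISHED_APPS[app] for app in USER_ENTITLEMENTS.get(user, set())}


print(len(vpn_reachable("alice")))  # 14
print(gateway_reachable("alice"))   # {'10.20.0.5'}
```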

A variety of cloud-based SaaS solutions have also emerged, primarily to help customers that are starting to adopt zero trust. However, moving to these solutions typically requires a fundamental shift in how the environment is architected and accessed. While such a solution may be the destination, it often isn’t the first port of call. On the journey to zero trust, it often makes sense to first evaluate what’s already implemented and how it can be arranged and deployed to start the organizational transition.

How to Get There

The first step in any such transition is to understand the surface area to be protected and how applications, clients, and services communicate today. Combined with an inventory of the existing potential control points in the environment, this drives the architecture of an approach customized to your unique ecosystem. At this stage, customers often evaluate what they can selectively introduce to accelerate the path to zero trust, such as cloud-based identity to unify access models across a hybrid environment, or additional Layer 7 services that can have a positive impact.
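One way to start the inventory step, sketched in Python under the assumption that you already have flow records (for example, exported from firewall or load balancer logs) tagged with whether they passed through a control point: group clients by service and list the service/port pairs currently reached around every control point. The records and tagging are hypothetical.

```python
from collections import defaultdict

# Hypothetical observed flows: (client, service, port, via_control_point).
FLOWS = [
    ("laptop-17", "crm",   443, True),
    ("laptop-17", "files", 445, False),
    ("build-01",  "git",   22,  False),
    ("laptop-22", "crm",   443, True),
]


def inventory(flows):
    """Group clients by service and flag flows that bypass every control point."""
    clients = defaultdict(set)
    uncovered = set()
    for client, service, port, via_cp in flows:
        clients[service].add(client)
        if not via_cp:
            uncovered.add((service, port))
    return dict(clients), uncovered


clients_by_service, uncovered = inventory(FLOWS)
print(sorted(uncovered))  # [('files', 445), ('git', 22)]
```

The uncovered pairs are natural candidates for placing a new control point, such as publishing the service behind an application proxy.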

Once this context is established, you can define an approach to policy. The best way to get there is to look at your inventory of important applications and ask who should have access, what they should be able to do, where that access should be granted from, and which access methods are acceptable for each associated service. Once this is documented, you can identify the inputs or signals that can be used to make those decisions.
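The who/what/where/how questions can be captured as data and then used to derive which signals a decision point needs. In this minimal Python sketch, the policy values, the `ANY` marker, and the signal names are all assumptions for illustration.

```python
# Hypothetical documented answers to: who, what, from where, and how.
# ANY marks a question the policy deliberately leaves unrestricted.
ANY = None

POLICIES = {
    "payroll": {"who": {"hr"}, "what": {"read", "approve"},
                "where": {"office"}, "how": {"browser"}},
    "wiki":    {"who": {"staff"}, "what": {"read", "edit"},
                "where": ANY, "how": ANY},
}

# Assumed mapping from each question to the signal that can answer it.
SIGNAL_FOR = {
    "who": "idp_group_claim",
    "what": "http_method_and_path",
    "where": "source_network_segment",
    "how": "client_type",
}


def required_signals(policy: dict) -> set:
    """Only questions the policy actually restricts need a signal."""
    return {SIGNAL_FOR[q] for q, permitted in policy.items() if permitted is not ANY}


def is_allowed(policy: dict, request: dict) -> bool:
    """Every restricted question must be answered with a permitted value.

    For simplicity the request carries one value per question
    (e.g. a single group), which real identity data rarely does.
    """
    return all(permitted is ANY or request[q] in permitted
               for q, permitted in policy.items())


print(sorted(required_signals(POLICIES["wiki"])))
# ['http_method_and_path', 'idp_group_claim']
print(is_allowed(POLICIES["payroll"],
                 {"who": "hr", "what": "approve", "where": "remote", "how": "browser"}))
# False
```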

Intelligent load balancers, combined with other parts of your ecosystem such as your identity provider, can then be configured to use these inputs in the decision-making process. The last step is to put a model in place for ongoing, iterative improvement. This is only possible with a strong monitoring framework that tells you whether your intended outcomes are actually being met, or whether unintended negative outcomes are occurring, such as parts of your ecosystem that should have access to a protected application now being blocked.
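A simple monitoring check for the unintended-outcome case described above: compare access decisions against the documented intent and count denials for sources that were supposed to have access. The log format and the expected-access set are hypothetical; in practice these would come from your load balancer or IdP logs and your policy documentation.

```python
# Hypothetical decision log entries: (source, application, outcome).
DECISION_LOG = [
    ("branch-office", "erp", "deny"),
    ("hq",            "erp", "allow"),
    ("contractor",    "erp", "deny"),
    ("branch-office", "erp", "deny"),
]

# Source/application pairs the policy was *intended* to let in.
EXPECTED_ACCESS = {("hq", "erp"), ("branch-office", "erp")}


def unintended_denials(log, expected):
    """Count denials for source/app pairs that were supposed to have access."""
    counts = {}
    for source, app, outcome in log:
        if outcome == "deny" and (source, app) in expected:
            counts[(source, app)] = counts.get((source, app), 0) + 1
    return counts


print(unintended_denials(DECISION_LOG, EXPECTED_ACCESS))
# {('branch-office', 'erp'): 2}
```

The contractor denial is not reported because it matches intent; only the branch office, which should have access, is flagged.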

Beyond that, the right type of monitoring matters because no solution, short of not being on a network at all, can prevent every possible threat. Systems that can identify the seemingly loosely coupled indicators that lead up to access breaches, and help you build a clear picture from a forensics perspective, are therefore also critical to prevention and to shortening the time to detection and resolution.
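Correlating loosely coupled indicators can be sketched as a sliding time window per client: any single event is weak evidence, but several distinct indicators close together are worth investigating. The indicator names, the 300-second window, and the threshold of three are illustrative assumptions, not recommended values.

```python
from collections import defaultdict

# Hypothetical events: (timestamp_seconds, client, indicator).
EVENTS = [
    (100, "10.9.8.7", "failed_login"),
    (130, "10.9.8.7", "new_device"),
    (170, "10.9.8.7", "unusual_s3_delete"),
    (500, "10.1.1.1", "failed_login"),
    (9000, "10.9.8.7", "failed_login"),
]


def correlate(events, window=300, threshold=3):
    """Flag clients showing `threshold` distinct indicators within `window` seconds."""
    by_client = defaultdict(list)
    for ts, client, indicator in sorted(events):
        by_client[client].append((ts, indicator))

    flagged = {}
    for client, evs in by_client.items():
        start = 0
        for end in range(len(evs)):
            # Shrink the window from the left until it spans <= `window` seconds.
            while evs[end][0] - evs[start][0] > window:
                start += 1
            kinds = {ind for _, ind in evs[start:end + 1]}
            if len(kinds) >= threshold:
                flagged[client] = sorted(kinds)
                break
    return flagged


print(correlate(EVENTS))
# {'10.9.8.7': ['failed_login', 'new_device', 'unusual_s3_delete']}
```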

The key to success is to start with a focus on your organization’s business outcomes. These then drive the specific approach you take to evaluating options and starting the zero-trust journey. That said, the current IT security landscape demands a different approach from the models used in the past. Start by looking at what you already have in place and how it can be augmented to begin bringing in the foundational principles of a zero-trust strategy. Having the right model in place can help you stay a step ahead of bad actors and maintain a competitive edge, by preventing some security issues and detecting and responding to others more quickly.

Learn More

Learn more about what Kemp is doing in the zero trust space and evaluate our Zero Trust Access Gateway architecture by visiting https://kemptechnologies.com/solutions/zero-trust-access-gateway

Jason Dover

Jason Dover was Vice President of Product Strategy at Progress Kemp.
