How Kemp Uses Content Rules to Improve AX for Our K8S Applications

Introduction

As a DevOps engineer supporting multiple Kubernetes applications (developed by multiple different teams), it is important to have an ingress controller that can easily be configured for Layer 7 application routing and for enforcing HTTP standards across a diverse ecosystem. Kemp LoadMaster takes the guesswork out of application ingress and security concerns for published Kubernetes services. The integration of Kemp with Kubernetes through the ingress controller API has dramatically improved our workflow and the end-user Application Experience (AX).

Today, we will demonstrate how our team uses the Kemp LoadMaster load balancing solution to deliver services to external customers and internal organisational teams. I’ll focus on the content rules engine, which is the core element in this setup, but it is important to note that the holistic solution we use encompasses many other LoadMaster features (see the Appendix for these topics).

The team I’m a part of has been publishing applications in the Microsoft Azure Kubernetes environment since 2017, and we have iterated over several ingress controllers and models. We have been eating our own dog food and using this setup internally since early 2019. It has proven very successful and has allowed us to directly address the challenges we face.

Application content switching

Brief overview of virtual services and sub-virtual services

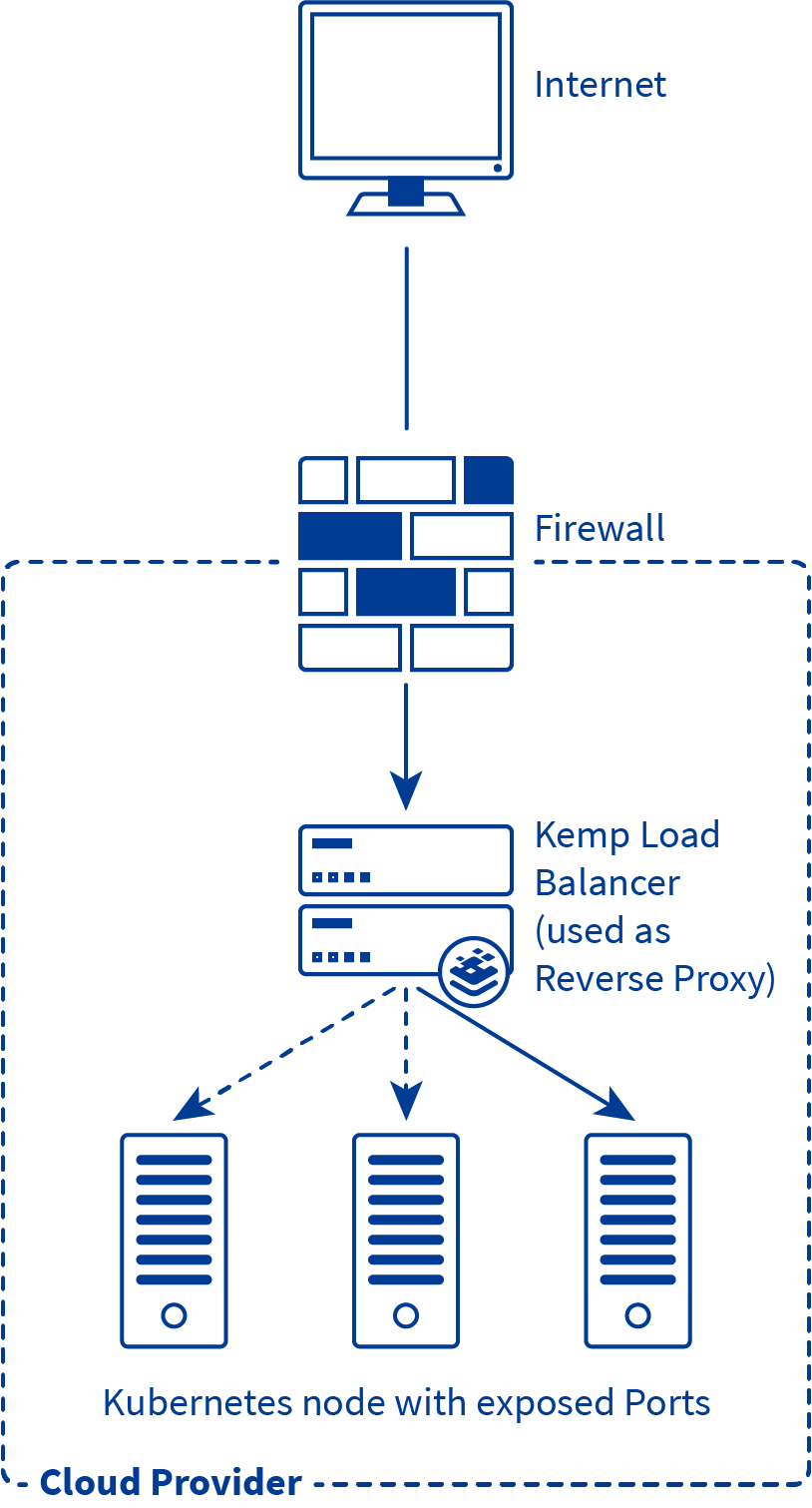

Using a virtual service is a common and proven method of providing high availability and controlling traffic distribution for applications. A virtual service serves as an abstraction of one or more real servers. To put this into context, we deploy a Kemp virtual LoadMaster in the cloud and configure a virtual service. This virtual service then directs traffic to our Kubernetes nodes on the exposed ports configured for published services (real server sockets in LoadMaster lingo).

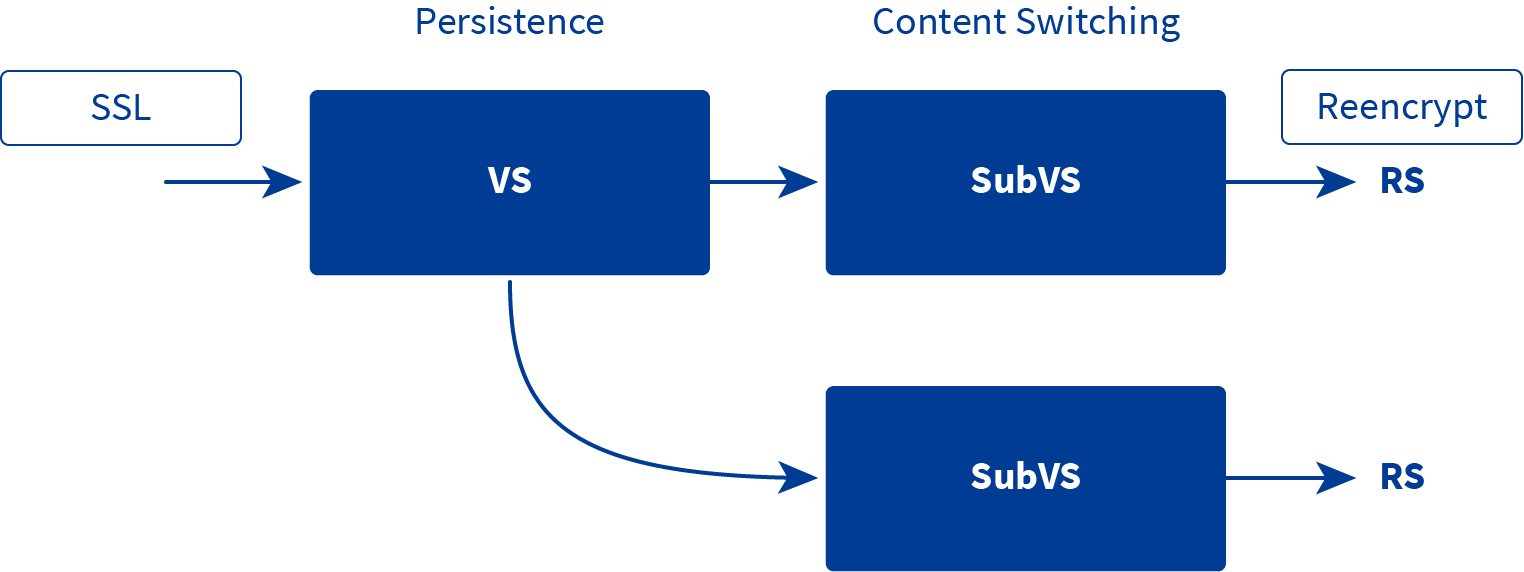

On Kemp LoadMaster, the concept of virtual services is taken a step further: a virtual service can have sub-virtual services. This is especially useful when you want to publish multiple services, sites, applications or micro-services under a single IP address, and/or to differentiate the service configuration of sub-components of a service (for example, in a micro-service architecture).

Content matching/switching

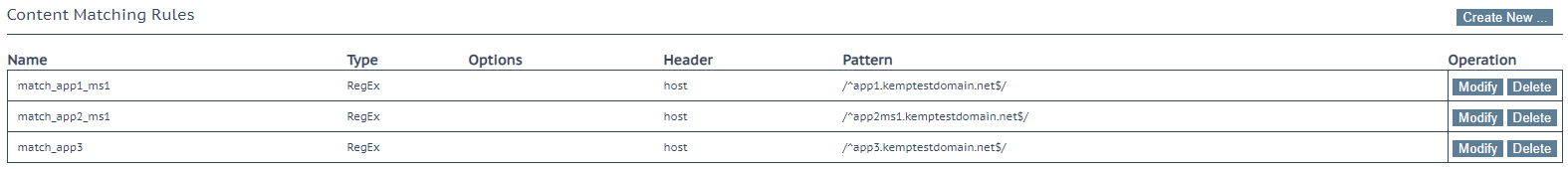

Kemp LoadMaster simplifies traffic steering and modification by using content rules, which are based on regular expression syntax. The content rules engine can add, remove and edit headers, rewrite URLs, rewrite HTTP payload bodies, and match content based on headers and/or URIs. Content matching rules can be applied to sub-virtual services and ‘real servers’ based on characteristics of the requests. This blog just scratches the surface of the feature set; for more on the content rules engine, see the primary feature doc.

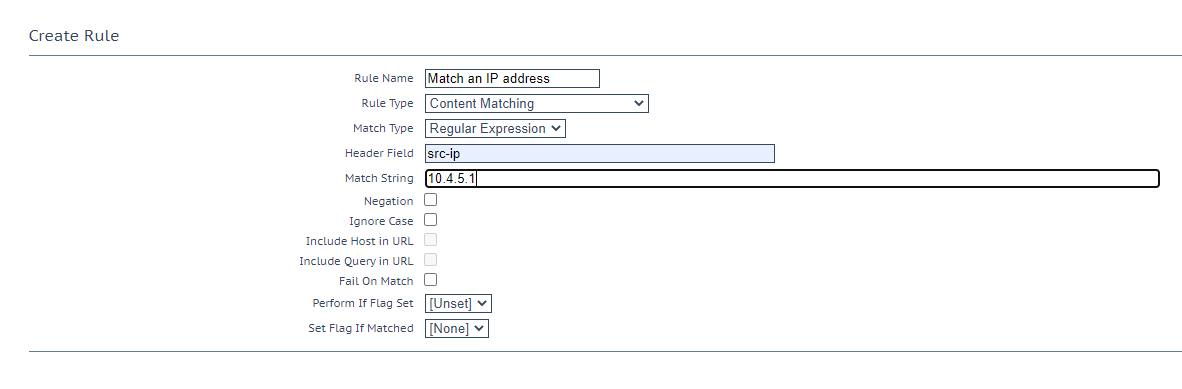

In our environment we primarily use content matching against the HTTP Host header of the sites: we differentiate the published services/micro-services by matching on the sub-domain section of the FQDN. Our other use case is matching on the L3 source IP address in the packet header (src-ip) so that only certain permitted IP addresses can access select services/micro-services.
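To make the Host header matching concrete, here is a minimal Python sketch of the idea. It is not LoadMaster configuration: the hostnames, the SubVS names and the `select_subvs` helper are all hypothetical and only illustrate how a regular expression on the sub-domain can pick a destination.

```python
import re
from typing import Optional

# Hypothetical rules: (regex applied to the Host header, SubVS name).
# Hostnames and SubVS names are made up for illustration.
HOST_RULES = [
    (re.compile(r"^api\.example\.com$", re.IGNORECASE), "subvs-api"),
    (re.compile(r"^portal\.example\.com$", re.IGNORECASE), "subvs-portal"),
]


def select_subvs(host_header: str) -> Optional[str]:
    """Return the SubVS whose rule matches the Host header, or None."""
    # Drop an optional ":port" suffix before matching (IPv6 literals are
    # not handled in this sketch).
    hostname = host_header.split(":", 1)[0].strip()
    for pattern, subvs in HOST_RULES:
        if pattern.match(hostname):
            return subvs
    return None
```

A request for `portal.example.com:443` would be steered to `subvs-portal`, while an unknown sub-domain matches nothing and returns `None`.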

Application routing

The team I’m a part of manages the Kemp production environments for two large Kubernetes applications and several smaller ones. By using a virtual service with sub-virtual services to represent separate applications and application micro-services, in combination with content matching, we can configure routing to exposed Kubernetes sockets with ease.

Example (based on live environment)

In the above example there are three top-level virtual services: two are for legacy application support (virtual machine backend) and the third serves applications published on Kubernetes.

The above shows typical hostname content matching rules which would make sense for this application.

Here, we show how to configure source IP matching, which allows you to restrict or allow access to services/micro-services either in combination with or independently of other rules. For instance, we could restrict the administration section of an application so that it is only accessible from a single source IP address.
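The logic behind a source IP restriction can be sketched in a few lines of Python with the standard `ipaddress` module. This is only an illustration of the matching idea, not how LoadMaster implements it; the allowlist entries (taken from documentation address ranges) and the `is_allowed` helper are assumptions.

```python
import ipaddress

# Hypothetical allowlist for an administration SubVS.
# The addresses come from the reserved documentation ranges.
ADMIN_ALLOWED = [
    ipaddress.ip_network("203.0.113.10/32"),  # a single admin workstation
    ipaddress.ip_network("198.51.100.0/24"),  # an office range
]


def is_allowed(src_ip: str) -> bool:
    """Return True if the source IP falls inside any permitted network."""
    addr = ipaddress.ip_address(src_ip)
    return any(addr in network for network in ADMIN_ALLOWED)
```

With this allowlist, `203.0.113.10` is permitted and its neighbour `203.0.113.11` is not, which is the "single source IP address" case described above.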

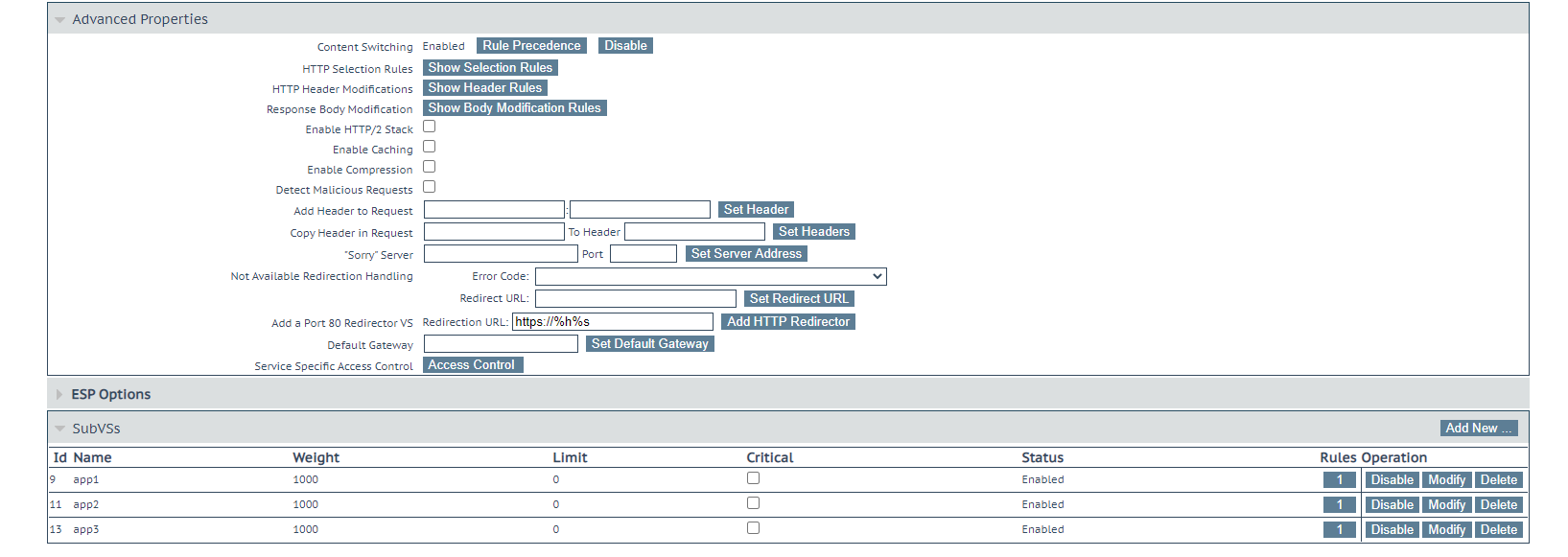

The content switching option needs to be enabled in the advanced properties section of the configuration, and rules should be applied to each relevant SubVS at the bottom. In some scenarios you might need to adjust the rule precedence (the order in which the rules are processed against incoming traffic).
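Why precedence matters is easiest to see with a small sketch. The Python below assumes rules are evaluated in order and that the first match wins; that evaluation model is an assumption made for illustration rather than a statement of LoadMaster's exact semantics, and the patterns and SubVS names are hypothetical.

```python
import re


def first_match(rules, host):
    """Return the target of the first rule (in list order) that matches."""
    for pattern, target in rules:
        if re.search(pattern, host):
            return target
    return None


# Hypothetical rules: one specific, one broad catch-all.
specific = (r"^admin\.example\.com$", "subvs-admin")
catch_all = (r"\.example\.com$", "subvs-default")

# Specific rule first: the admin host reaches its own SubVS.
good_order = first_match([specific, catch_all], "admin.example.com")

# Catch-all first: it shadows the specific rule.
bad_order = first_match([catch_all, specific], "admin.example.com")
```

Here `good_order` is `"subvs-admin"` while `bad_order` is `"subvs-default"`, so under this model a broad rule placed too early silently captures traffic meant for a more specific one.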

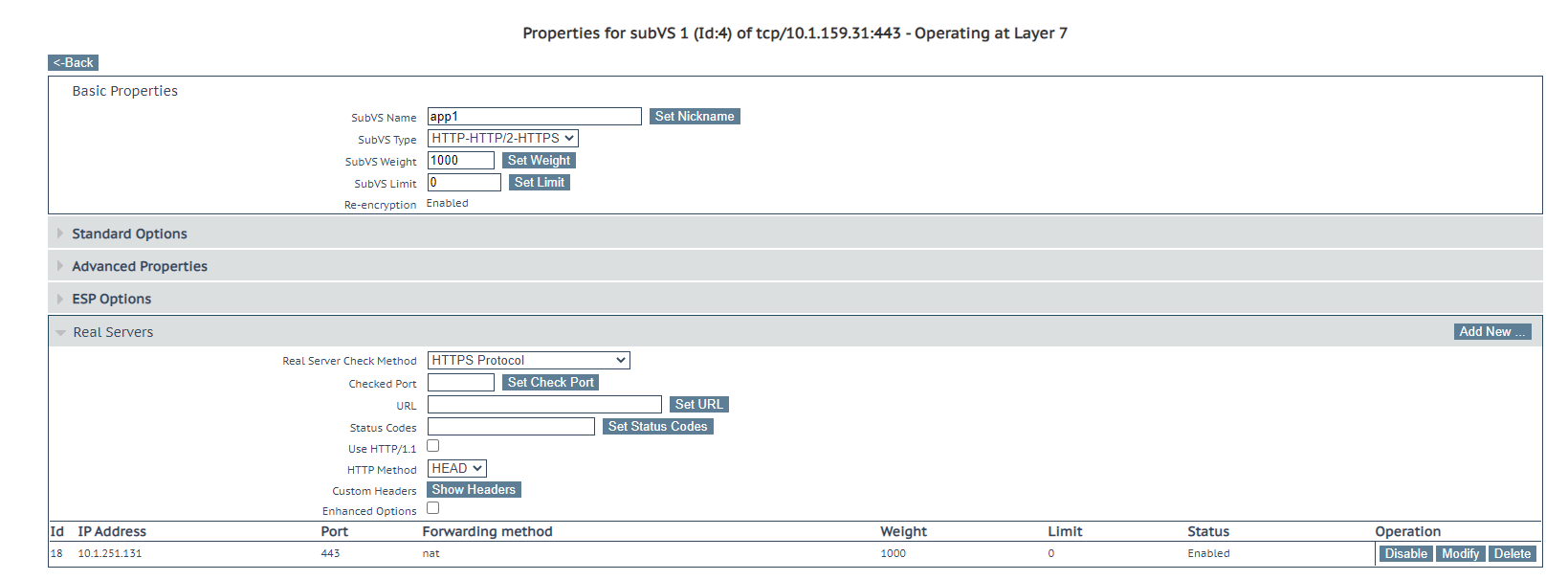

The virtual service configuration, by itself, does not route to anything without real servers defined. The final step is to add the exposed Kubernetes node service port(s) to the Sub-Virtual Service.

For a more detailed explanation of how to configure hostname content switching, see this doc. For a more detailed explanation of how to configure source IP address content switching, see this doc.

Service mode module for LoadMaster

At Kemp we have developed an API module for the LoadMaster which allows automated, dynamic linking of virtual services to Kubernetes service pods. The load balancer is configured to use certificate-based authentication when communicating with the K8s API server. Tags are added to the Kubernetes services for which you have created virtual services, and maintenance then becomes zero touch: pods are linked and unlinked automatically as they are created and destroyed.
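Conceptually, this kind of automatic linking is a reconciliation step: compare the endpoints currently attached to a virtual service with the endpoints Kubernetes reports, then add and remove the difference. The Python sketch below shows only that idea; it is not the module's implementation, and `plan_changes` and the sample endpoints are hypothetical.

```python
from typing import List, Set, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


def plan_changes(
    current: Set[Endpoint], desired: Set[Endpoint]
) -> Tuple[List[Endpoint], List[Endpoint]]:
    """Return (endpoints to link, endpoints to unlink)."""
    to_link = sorted(desired - current)    # new pods not yet attached
    to_unlink = sorted(current - desired)  # pods that no longer exist
    return to_link, to_unlink


# Hypothetical state: one pod was destroyed and one was created.
attached = {("10.0.0.1", 30080), ("10.0.0.2", 30080)}
reported = {("10.0.0.2", 30080), ("10.0.0.3", 30080)}
to_link, to_unlink = plan_changes(attached, reported)
```

In this example `10.0.0.3` would be linked and `10.0.0.1` unlinked, while the unchanged `10.0.0.2` is left alone.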

Using content rules to enhance security in applications

Content rules can also be used to enhance security at the application layer. My team has found this especially useful for published applications where we have only a very small Dev profile or do not contribute directly to the application security discussion.

Over the years our sites have been audited and pen tested multiple times. I’ll cover the most common security header injections/modifications which we have applied to the applications. It is possible to apply policies for any of the HTTP security headers. There are plenty of fantastic free auditing tools out there, but for HTTP security specifically I would recommend Mozilla Observatory (here).
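For a quick local sanity check between full audits, a few lines of Python can report which of the headers covered in this post are absent from a response. This is a minimal sketch, not a replacement for a real auditing tool; the `missing_security_headers` helper and the sample response are hypothetical.

```python
# The security headers discussed in this post.
EXPECTED_HEADERS = (
    "Strict-Transport-Security",
    "X-Content-Type-Options",
    "X-XSS-Protection",
    "Content-Security-Policy",
)


def missing_security_headers(response_headers):
    """Return the expected headers that are absent (case-insensitive)."""
    present = {name.lower() for name in response_headers}
    return [h for h in EXPECTED_HEADERS if h.lower() not in present]


# Hypothetical response that only sets one of the four headers.
sample = {"Content-Type": "text/html", "x-content-type-options": "nosniff"}
report = missing_security_headers(sample)
```

The comparison is case-insensitive because HTTP header names are; for the sample above, `report` lists the three headers that still need an injection rule.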

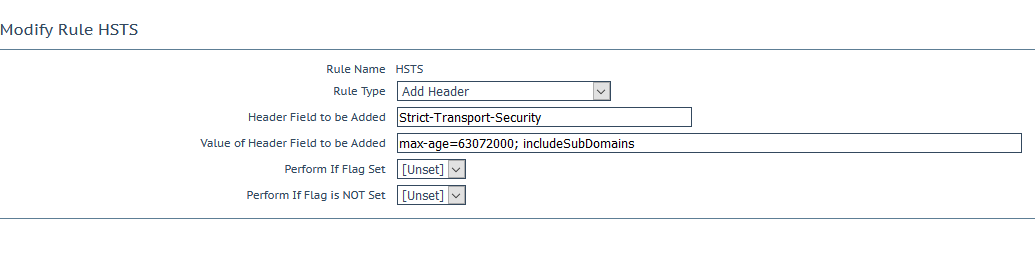

HSTS header injection

The HTTP Strict-Transport-Security (HSTS) response header informs browsers/applications that the site should only be accessed over HTTPS. This prevents clients from accessing the site insecurely due to server misconfiguration or client app/browser issues (or because someone released a Development instance by accident...).
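The effect of an HSTS injection rule can be sketched as follows. This Python is an illustration of what ends up in the response, not LoadMaster rule syntax; the `add_hsts` helper and the one-year `max-age` default are assumptions chosen for the example.

```python
def add_hsts(headers, max_age=31536000, include_subdomains=True):
    """Return a copy of headers with Strict-Transport-Security set if absent."""
    result = dict(headers)
    already_set = any(
        name.lower() == "strict-transport-security" for name in result
    )
    if not already_set:
        value = f"max-age={max_age}"
        if include_subdomains:
            value += "; includeSubDomains"
        result["Strict-Transport-Security"] = value
    return result


# Hypothetical backend response without the header.
secured = add_hsts({"Content-Type": "text/html"})
```

The sketch leaves an existing header untouched, so a backend that already sets its own policy is not overridden.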

X-Content-Type-Options

This header injection rule instructs the client and intermediate devices that the Content-Type header should be followed and not changed. This can be useful for asking client-side apps/browsers to handle payload data in a certain way, to enforce an expected behaviour.

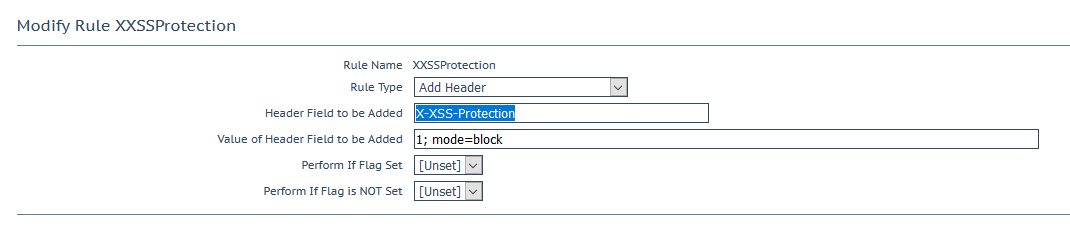

X-XSS-Protection

This header injection instructs browsers to stop loading pages when they detect cross-site scripting (XSS) attacks. It is deprecated in newer versions of browsers in favour of a Content-Security-Policy header, but it still has its place for supporting legacy systems.

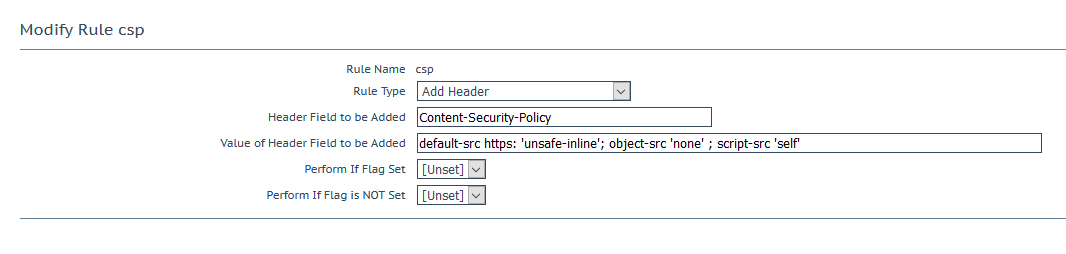

Content-Security-Policy

The Content-Security-Policy (CSP) header is the current method used to protect against multiple different attack vectors, primarily XSS related. In our environment, enabling CSP on the load balancer brought to light several security concerns around workflows within an application. While the developers were not too pleased at the time, it led to meaningful process change in the long run. For further reading on CSP see here and/or here.
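A CSP value is a semicolon-separated list of directives, each followed by its allowed sources. The Python sketch below assembles such a value from a dictionary; the `build_csp` helper, the chosen directives and the CDN hostname are hypothetical and not the policy we run in production.

```python
def build_csp(directives):
    """Join {directive: [sources]} into a Content-Security-Policy value."""
    parts = []
    for name, sources in directives.items():
        parts.append(" ".join([name, *sources]))
    return "; ".join(parts)


# Hypothetical policy: same-origin by default, plus one script CDN.
policy = build_csp(
    {
        "default-src": ["'self'"],
        "img-src": ["'self'", "data:"],
        "script-src": ["'self'", "https://cdn.example.com"],
    }
)
```

Keeping the policy as structured data like this makes it easier to review with developers before the resulting string is injected as a header.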

Summary

Our internal development environment at Kemp has benefited from the use of advanced application switching in our Kubernetes environment. The integration of a Kubernetes ingress controller API with the easy-to-use and robust content policy rulesets has made our workflow smoother and more secure. The Kemp LoadMaster solution has solved many problems for our customers and within the Kemp development teams. I hope this write-up is of value to your DevOps situation.

Appendix

- SSL offloading, certificate and cipher management - https://docs.progress.com/bundle/loadmaster-feature-description-ssl-accelerated-services-ga/page/Introduction.html

- WAF - https://docs.progress.com/bundle/loadmaster-feature-description-web-application-firewall-ga/page/Introduction.html

Philippe Mahu

Philippe is a DevOps engineer at Kemp who works on CI/CD processes for both packaged and SaaS products. Philippe has been with Kemp for 6 years, during which he has worn many different hats, with responsibilities ranging from Network support to Systems engineering to Software Development team lead. He is somewhat of a Jack of all trades with a passion for problem solving and learning about new technologies.
