What is Kubernetes?

Introduction

With the recent addition of the Kemp Ingress Controller for Kubernetes (available in LoadMaster), what better time to look at the role Kubernetes plays in delivering microservice applications? Kubernetes is an open-source platform for managing containerized applications at scale. It grew out of an internal Google project to streamline containerized application management and has seen rapid and widespread adoption. It is now one of the fastest-growing open-source projects in history. The name Kubernetes is derived from the Greek word for helmsman or pilot, which is fitting: Kubernetes helps steer an ever-increasing proliferation of containers. It is pronounced “koo-ber-net-ees” and is often abbreviated to ‘k8s’ or ‘k-eights’ in conversation and text.

The Path to Containerization

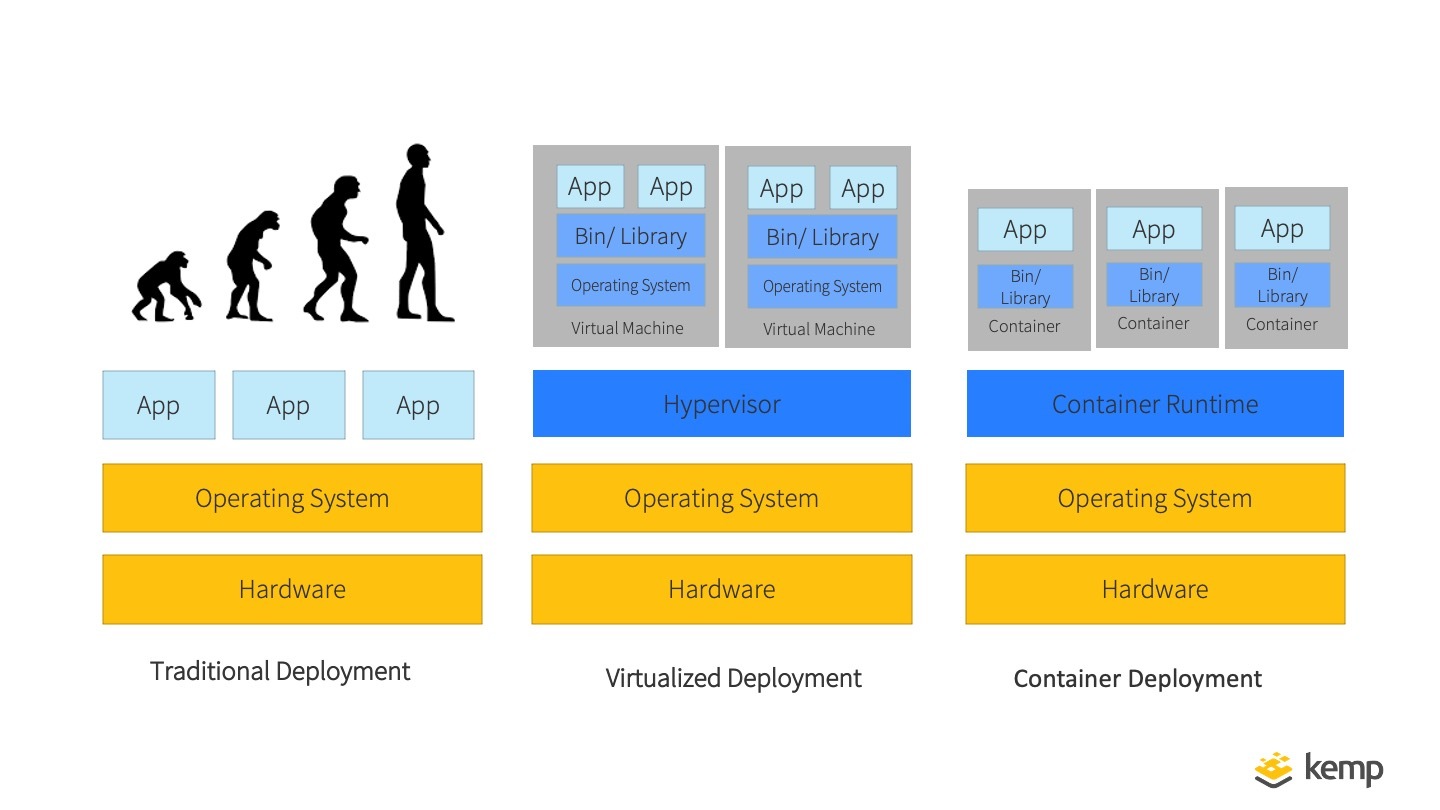

The role that Kubernetes performs and the need for it requires an understanding of containers in software deployment. Containers grew out of the virtualization deployment era of infrastructure management, which in turn was built upon what is commonly known as the traditional server deployment model. The following diagram shows the path from traditional deployment to virtualized deployment, and on to containerization.

Most people are familiar with the virtualization model, where a hypervisor allows virtual machines (VMs) to be abstracted from the underlying hardware. Each virtual machine still carries its own copy of an operating system and other dependencies, independent of any other VM.

Containerization takes the level of abstraction a step further. Containers are similar to VMs, but they share the host's operating system kernel rather than carrying their own copy of an operating system. As such, they are considered more lightweight than VMs. Containers do have their own file system, CPU allocation, memory, and process space, so multiple containers running on the same hardware remain independent of one another, as VMs are. They are also decoupled from the underlying hardware and from most of the host's configuration. This means that the same container image can run unaltered on any host that provides a compatible container runtime, whether that is a developer's Windows PC or Mac, a Linux server, or a public cloud provider (on Windows and macOS, Linux containers typically run inside a lightweight virtual machine). This is very useful for development and deployment workflows: developers can write code in a container on their PC or Mac, and the same container can then be deployed to a server or to the cloud with no changes.

The ease of use and benefits of lightweight containers mean that they tend to proliferate. Kubernetes provides the tools to tame and manage them.

Microservices

Microservices is an application design and development approach that breaks an application into independent, loosely coupled services with clearly defined communication interfaces. This allows individual services to be scaled, upgraded, or changed without impacting other microservices. It minimises code dependencies, provides more flexibility in technology choices, and supports increased reusability. While microservices do not require any particular deployment model (see above), their granular, independent nature makes this approach very suitable for containerization.

What Does Kubernetes Do?

Some organizations, particularly those adopting a microservice approach to application design, can have hundreds or even thousands of containers deployed from development through to production. These containers are often spread across multiple cloud services as well as private data centers and server farms. Operations teams who need to keep these applications running and available need tools to manage these large numbers of containers efficiently. This is known as container orchestration, and it is what Kubernetes is designed to deliver.

Operations teams, developers, and DevOps teams continue to adopt Kubernetes due to its comprehensive functionality, the large and increasing range of tools that are available to supplement it, and its adoption across the leading cloud service providers. Many cloud providers offer fully managed Kubernetes services.

Kubernetes provides the following services to help orchestrate containers:

- Automated rollouts and rollbacks let you describe the target container landscape that an application needs and leave Kubernetes to handle the process of reaching that state. This covers new deployments, changes to existing deployed containers, and rollbacks that revert a deployment to an earlier state.

- Service discovery automatically exposes a container to the broader network, or to other containers, using a DNS name or an IP address.

- Storage orchestration enables storage from the cloud or local resources to be mounted as needed and for as long as it is required.

- Load balancing within a cluster distributes traffic across multiple containers delivering the same application to ensure consistent performance.

- Self-healing is achieved by monitoring containers for issues and restarting them automatically if required.

- Secret and configuration management enables sensitive information such as passwords, OAuth tokens, and SSH keys to be stored and managed securely. Secrets, and the application configurations that use them, can be deployed and updated without rebuilding the containers and without exposing the secrets on the network.
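
As a rough illustration of the rollout and self-healing services listed above, the following Python sketch models a desired-state reconciliation loop. It is a toy model rather than Kubernetes code: the `Cluster` class, the `reconcile` function, and the `web:1.0` and `web:1.1` image names are all hypothetical, and a real rolling update replaces containers gradually rather than all at once.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Cluster:
    """Toy stand-in for a cluster: a mapping of container name -> image."""
    running: dict = field(default_factory=dict)
    _ids: count = field(default_factory=count, repr=False)

    def start(self, image: str) -> str:
        name = f"app-{next(self._ids)}"
        self.running[name] = image
        return name

    def stop(self, name: str) -> None:
        self.running.pop(name, None)


def reconcile(cluster: Cluster, image: str, replicas: int) -> None:
    """Move the cluster toward `replicas` containers running `image`."""
    # Stop containers running an out-of-date image (simplified rollout).
    for name, running_image in list(cluster.running.items()):
        if running_image != image:
            cluster.stop(name)
    # Start containers until the desired count is reached (scale up or heal).
    while len(cluster.running) < replicas:
        cluster.start(image)
    # Stop surplus containers (scale down).
    while len(cluster.running) > replicas:
        cluster.stop(next(iter(cluster.running)))


cluster = Cluster()
reconcile(cluster, image="web:1.0", replicas=3)  # initial deployment
cluster.stop("app-0")                            # simulate a crashed container
reconcile(cluster, image="web:1.0", replicas=3)  # self-healing restores the count
reconcile(cluster, image="web:1.1", replicas=3)  # rollout of a new version
print(sorted(cluster.running.values()))
```

The point of the sketch is that the operator only states the desired outcome (which image, how many replicas). The loop works out what to start or stop, and the same function covers first deployment, recovery from failure, and upgrade.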

Kubernetes Architecture

Kubernetes deployments are built using the following logical and physical components:

- Clusters – the core building blocks of the Kubernetes architecture. Clusters comprise nodes (see below). Each cluster has one or more worker nodes that run the deployed containers, as well as at least one master node (called the control plane in current Kubernetes documentation) that controls and monitors the worker nodes.

- Nodes – single compute hosts, each of which can be a physical machine, a virtual machine, or a cloud instance. Nodes act as workers or masters and must exist within a cluster (see above). Worker nodes host and run the containers that are deployed, and a master node in each cluster manages the worker nodes in that cluster. Each worker node runs an agent called a kubelet that the master node uses to monitor and manage it.

- Pods – groups of one or more containers that share compute resources and a network. Typically, containers that are tightly coupled will exist in a single Pod. Kubernetes scales resources at the Pod level: if additional capacity is needed to deliver an application running in the containers of a Pod, the whole Pod is replicated.

- Deployments – control the creation of a containerized application and keep it running by continuously monitoring its state. A Deployment specifies how many replicas of a Pod should run on a cluster. If a Pod fails, the Deployment will recreate it.
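
To make the relationship between Deployments, Pods, and containers more concrete, here is a minimal sketch that builds a Deployment manifest as a Python dictionary and prints it as JSON. The field layout follows the `apps/v1` Deployment API, but the application name `hello-web`, the `nginx:1.25` image, and the port number are assumptions chosen purely for illustration.

```python
import json

# Hypothetical application name and image, used only for illustration.
APP_NAME = "hello-web"
IMAGE = "nginx:1.25"

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": APP_NAME},
    "spec": {
        # How many copies of the Pod the Deployment should keep running.
        "replicas": 3,
        # The selector must match the labels on the Pod template below.
        "selector": {"matchLabels": {"app": APP_NAME}},
        # The Pod template: every replica is created from this description.
        "template": {
            "metadata": {"labels": {"app": APP_NAME}},
            "spec": {
                "containers": [
                    {
                        "name": "web",
                        "image": IMAGE,
                        "ports": [{"containerPort": 80}],
                    }
                ]
            },
        },
    },
}

manifest = json.dumps(deployment, indent=2)
print(manifest)
```

Kubernetes accepts manifests in JSON as well as YAML, so if the output were saved to a file such as `deployment.json` (a hypothetical filename), it could be submitted to a cluster with `kubectl apply -f deployment.json`. Changing `replicas` and re-applying the file is how the Pod-level scaling described above would be requested.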

Summary

Now that we have introduced the basic concepts of Kubernetes, next week we will examine the impact Kubernetes has on network administrators and the challenges that microservice-based applications bring.

If you are currently looking at utilising Kubernetes and containerised microservices, make sure to check out the Kemp Ingress Controller for Kubernetes here…

The Ingress Controller Series

Check out our other blogs in the series:

- WHAT IS KUBERNETES?

- IMPACT OF KUBERNETES ON NETWORK ADMINISTRATORS

- EXPOSING KUBERNETES SERVICES

- THE KEMP INGRESS CONTROLLER EXPLAINED

- A HYBRID APPROACH TO MICROSERVICES WITH KEMP

- MANAGING THE RIGHT LEVEL OF ACCESS BETWEEN NETOPS AND DEV FOR MICROSERVICE PUBLISHING

Upcoming Webinar

For more information, check out our upcoming webinar: Integrating Kemp load balancing with Kubernetes.