Introduction

In our previous blogs, we introduced Kubernetes and explained the challenges it brings for network administrators, particularly around exposing Kubernetes services for access. We also looked in detail at the Ingress Controller and its role in controlling inbound traffic. An often ignored topic is how to manage access to Kubernetes applications alongside monolithic applications. For monolithic applications, availability and redundancy are typically achieved by utilising a Load Balancer to route requests to servers. For microservices, similar functionality is provided by the Ingress Controller. Let’s see how we can manage these side-by-side.

Microservices/Monolithic – Can I have both?

While much of the current content pertaining to Kubernetes and microservices assumes a 100% microservice architecture, the reality is that many organisations may choose to support both microservice and monolithic architectures side-by-side, and this may be the case for some time.

This hybrid approach could be out of necessity for supporting existing applications. It could also be a deliberate choice, enabling the benefits of both to be realised depending on the application’s properties and needs. Support for both may even be part of a transition path to a full microservice architecture. Thankfully, managing access to monolithic alongside microservice applications is possible without overly complicating network operations.

Ingress and Load Balancing

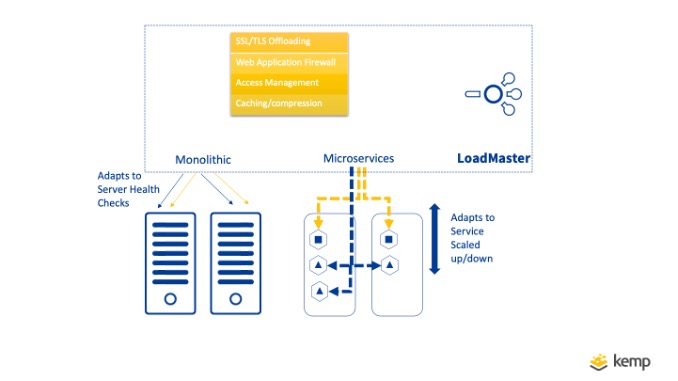

Network Operations teams typically use Load Balancers to provide resilient application delivery. As we discussed previously, an Ingress Controller and a Load Balancer share many properties. Both route inbound client requests to multiple application endpoints. Both use scheduling methods to define how traffic is distributed. Both can ensure client requests ‘persist’ to the same endpoint where possible.

With a Load Balancer, a Virtual Service (or Virtual Server) is configured with an IP address/port for incoming requests and a set of “Real Servers” to distribute traffic to. Health checks ensure unavailable servers are taken out of rotation without users suffering application downtime. Sub Virtual Services are also used to segregate traffic by hostname and/or path.

With Kubernetes, applications are made up of microservices. The Service Type defines how these can be accessed, and an Ingress Resource may be used to define the external IP address/port to use and the rules for how specific hostnames and/or paths are routed to services. If a pod becomes unavailable, Kubernetes will take it out of service and re-create it. Based on scaling rules, the number of pods may increase or decrease. The Ingress Controller automatically adapts to these changes, ensuring requests are always sent to existing pods and maintaining application availability.
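To make the "scaling rules" mentioned above concrete, here is a minimal sketch of a standard Kubernetes HorizontalPodAutoscaler. The Deployment name `web-frontend`, the replica bounds, and the CPU target are hypothetical values chosen for illustration and are not taken from any Kemp configuration.

```yaml
# hpa-example.yaml (illustrative only; all names and numbers are assumptions)
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web-frontend
spec:
  # The workload whose pod count is adjusted (hypothetical Deployment)
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web-frontend
  # The pod count stays between these bounds
  minReplicas: 2
  maxReplicas: 6
  metrics:
  # Add pods when average CPU utilisation exceeds 70%, remove them when it falls
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 70
```

As pods are added or removed under a rule like this, the set of endpoints behind the Service changes, and that is the change the Ingress Controller has to track.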

LoadMaster Ingress Controller and Load Balancer

The release of Kemp Ingress Controller means LoadMaster can operate both as an Ingress Controller for Kubernetes and as a Load Balancer for monolithic applications. By supporting both sets of functionality on a single device, management can be simplified. This enables advanced functionality such as Web Application Firewall, Edge Security, SSL Offloading, and Caching to be utilised uniformly.

Let’s take a look at two ways of configuring microservices and monolithic applications together on LoadMaster.

Side by Side Applications

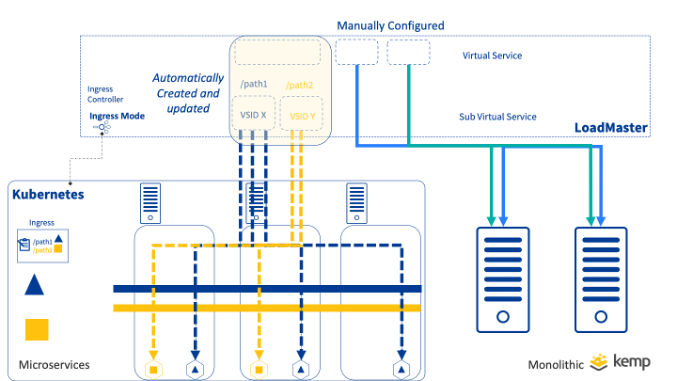

Where some applications are microservice-based and others monolithic, a simple approach is to run both application types side-by-side using separate virtual services. To achieve this, virtual services for monolithic applications are created manually. The Ingress Resource is then defined in Kubernetes, which results in additional virtual service(s) being added with the required traffic rules and options included, ensuring the Ingress functionality is realised.

Here is an example Ingress Resource definition in Kubernetes.

```yaml
# kemp-ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kemp-ingress
  annotations:
    "kubernetes.io/ingress.class": "kempLB"
    "kemp.ax/vsip": "10.151.0.234"
    "kemp.ax/vsport": "80"
    "kemp.ax/vsprot": "tcp"
    "kemp.ax/vsname": "InboundKubernetesVs"
spec:
  rules:
  - host: review.kemp.ax
    http:
      paths:
      - path: /reviewinput
        backend:
          serviceName: review-front
          servicePort: 80
  - host: cart.kemp.ax
    http:
      paths:
      - path: /checkout
        backend:
          serviceName: checkout-front
          servicePort: 80
```

In this example, the Kemp Ingress Controller will automatically create a Virtual Service on 10.151.0.234:80 named InboundKubernetesVs. This will then split traffic as defined (review.kemp.ax/reviewinput and cart.kemp.ax/checkout). This traffic will be sent to existing pods of the review-front and checkout-front services.

In this scenario, the Ingress Controller virtual services are created and updated automatically and the other virtual services operate as per configuration.
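The `extensions/v1beta1` Ingress API used in the example was removed in Kubernetes 1.22, so newer clusters will not accept that manifest as written. The following is a sketch of the same rules expressed in `networking.k8s.io/v1`. It assumes the Kemp annotations carry over unchanged, which this post does not confirm, and the choice of `pathType: Prefix` is also an assumption, since the original manifest predates that field.

```yaml
# kemp-ingress-v1.yaml (sketch; annotation behaviour on this API version is assumed)
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: kemp-ingress
  annotations:
    "kubernetes.io/ingress.class": "kempLB"
    "kemp.ax/vsip": "10.151.0.234"
    "kemp.ax/vsport": "80"
    "kemp.ax/vsprot": "tcp"
    "kemp.ax/vsname": "InboundKubernetesVs"
spec:
  rules:
  - host: review.kemp.ax
    http:
      paths:
      - path: /reviewinput
        pathType: Prefix        # required in v1; Prefix is an assumed choice
        backend:
          service:              # v1 nests the service name and port
            name: review-front
            port:
              number: 80
  - host: cart.kemp.ax
    http:
      paths:
      - path: /checkout
        pathType: Prefix
        backend:
          service:
            name: checkout-front
            port:
              number: 80
```

The routing intent is unchanged: only the API version, the mandatory `pathType`, and the shape of the `backend` block differ.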

Split Application

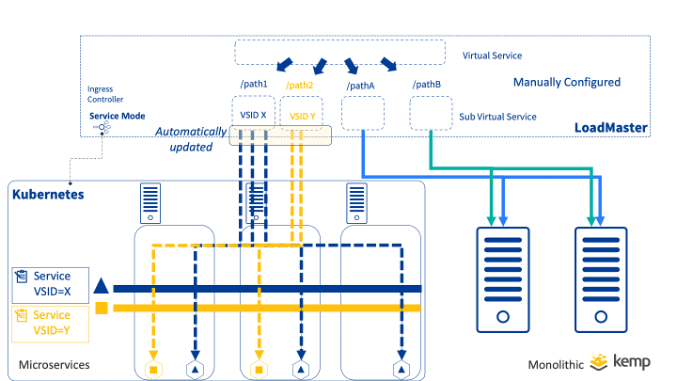

Where an application has components served by both containers and monolithic servers, a useful option is to split the application. This can be done by utilising a single virtual service that splits into both monolithic and microservice components. With this model, path and host rules are manually configured on the LoadMaster, taking this aspect of configuration away from Kubernetes.

Kemp Ingress Controller ‘Service Mode’ enables this by allowing Kubernetes Services to be mapped to specific Virtual Services, enabling a controlled approach. It doesn’t require an Ingress Resource and instead utilises a label and an annotation in the service definition.

```yaml
# kemp-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: path1microservice
  labels:
    kempLB: Enabled
  annotations:
    "vsid": "6"
spec:
  type: ClusterIP
  ports:
  - port: 80
  selector:
    app: path1
```

In this example, the service is labelled with kempLB: Enabled to instruct the LoadMaster that this service requires attention, and the "vsid": "6" annotation instructs the LoadMaster to direct requests from the Virtual Service with Identifier 6 to pods of this service. This results in Real Servers being dynamically created and updated based on changes to the Service. In this example, Virtual Service ID 6 is a SubVS that is already manually configured for a specific subset of traffic on the Virtual Service.
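For the Service above to have any pods to send traffic to, a workload carrying the `app: path1` label must exist. The following is a minimal sketch of such a Deployment. The Deployment name, replica count, and container image are hypothetical placeholders; only the `app: path1` label and port 80 come from the Service definition.

```yaml
# path1-deployment.yaml (illustrative; name, replicas and image are assumptions)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: path1
spec:
  replicas: 3
  selector:
    matchLabels:
      app: path1
  template:
    metadata:
      labels:
        app: path1            # matches the Service selector above
    spec:
      containers:
      - name: path1
        image: registry.example.com/path1:1.0   # placeholder image
        ports:
        - containerPort: 80   # the Service's port 80 targets this by default
```

Each pod created from this template becomes an endpoint of the Service, and so a candidate Real Server behind Virtual Service ID 6 as described above.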

This approach offers flexibility and control over inbound traffic: individual paths or hosts can be moved from monolithic servers to microservices one at a time, enabling a seamless migration from monolithic to microservice application architecture.

Summary

We have described two approaches for managing microservice and monolithic applications in a hybrid manner, showing the flexibility that can be realised by using a single Load Balancer/Ingress Controller.

To take full advantage of Kemp Ingress Controller functionality see here…

The Ingress Controller Series

- WHAT IS KUBERNETES?

- IMPACT OF KUBERNETES ON NETWORK ADMINISTRATORS

- EXPOSING KUBERNETES SERVICES

- THE KEMP INGRESS CONTROLLER EXPLAINED

- A HYBRID APPROACH TO MICROSERVICES WITH KEMP

- MANAGING THE RIGHT LEVEL OF ACCESS BETWEEN NETOPS AND DEV FOR MICROSERVICE PUBLISHING

Upcoming Webinar

For more information, check out our upcoming webinar: Integrating Kemp load balancing with Kubernetes.