Introduction

Following on from last week's Kubernetes introduction, this week we look at the impact of Kubernetes on network administrators. There is currently massive interest in the move to microservice applications. A recent O’Reilly report shows that more than 60% of respondents have been using microservices for a year or more, and almost one-third (29%) say their employers are migrating or implementing a majority of their systems using microservices. Although containers are not strictly necessary for microservices, containerised architectures are an excellent fit, and in most cases they use Kubernetes as the orchestration tool.

The benefits of the shift have been well articulated. Microservices help developers break applications into more easily managed components, minimise code dependencies, provide more flexibility in technology choice and support increased reusability. From a DevOps perspective, this allows for faster and easier deployment of new features, breaks down barriers to updates and enables easy scaling of applications.

But what about network administrators? Delivering applications via microservices changes not only how applications are developed and released but also how end-user traffic reaches the application, which is of interest to any network administrator.

Important Considerations for Network Admins

In any organisation, the successful rollout of microservices requires buy-in from all stakeholders, not least those responsible for network availability and application reachability. A successful transition therefore depends on fully understanding how these aspects of the application change. To do so, we need to compare the two application designs.

Application Comparison

In a traditional (monolithic) infrastructure, applications run on distinct servers (virtual, cloud or hardware) and application end points are typically the servers themselves, or at least a unique port number on a server. As a result, the number of application end points is relatively constant. Upgrades and changes are infrequent and come with some administrative cost, such as maintenance windows and/or loss of redundancy. Additional server end points may be added (perhaps as demand scales), though this isn’t a frequent occurrence. In addition, since applications are self-contained, most traffic flows from clients to the application (commonly referred to as North-South traffic), and there is less application-to-application traffic (East-West traffic) across the network.

In the case of containerised microservices, these properties change. Rather than being coupled to application servers, applications run in containers inside Kubernetes Pods, hosted on a cluster of two or more Nodes, and the number of Pods is largely independent of the number of Nodes. The number of containers used for an application can change regularly, and auto-scaling enables this to increase or decrease dynamically in response to demand. Changes to applications are much less cumbersome, and application containers can be created and destroyed on demand. Also, since applications are made up of many microservices, a large volume of traffic is sent between microservices, potentially across different Nodes in the cluster (East-West traffic).
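To make the auto-scaling behaviour concrete, here is a minimal Python sketch of a proportional scaling rule. It resembles the rule documented for the Kubernetes Horizontal Pod Autoscaler, but it is a simplification: the function name, the replica bounds and the metric values are illustrative assumptions, not part of any Kubernetes or Kemp API.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Scale the replica count by the ratio of observed load to target load.

    All parameter names and default bounds are hypothetical, chosen for illustration.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Round up so that capacity is never below what the target requires.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


# 4 Pods averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, 90.0, 60.0))  # 6
# 4 Pods averaging 20% CPU against a 60% target: scale in.
print(desired_replicas(4, 20.0, 60.0))  # 2
```

For a network administrator, the point is that the number of application end points is an output of a calculation like this one, re-evaluated continuously, rather than a value fixed at deployment time.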

Application Delivery Challenges

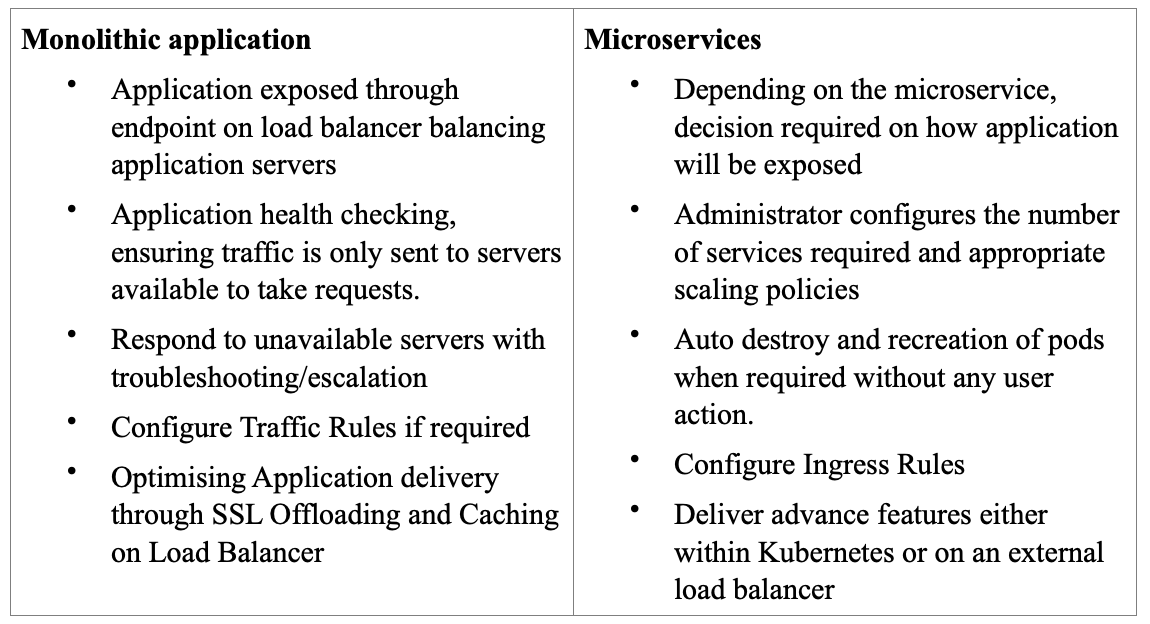

The changes outlined bring their own challenges to the job of ensuring applications can be accessed at all times by all users. For a traditional monolithic application, a network administrator is typically responsible for exposing the application through an end point, usually configured on a load balancer for application redundancy. Alongside this, intelligent health checking ensures traffic is only sent to servers that are available to take requests. When servers are unavailable, redundancy maintains application availability (albeit with some loss of capacity), and troubleshooting or escalation is triggered so that overall availability is maintained. Applications can be optimised through SSL offloading and caching, typically performed by a load balancer/Application Delivery Controller. Finally, content rules/policies may be configured so that certain traffic is sent to a specific server, typically based on path, hostname or device.
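The health-checking behaviour described above can be sketched in a few lines of Python. This is a toy model, not how any particular load balancer is implemented: the `Server` and `RoundRobinPool` names, the round-robin policy and the probe function are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Server:
    name: str
    healthy: bool = True


class RoundRobinPool:
    """Hypothetical pool that skips servers marked unhealthy."""

    def __init__(self, servers: Iterable[Server]) -> None:
        self.servers: List[Server] = list(servers)
        self._index = 0

    def run_health_checks(self, probe: Callable[[Server], bool]) -> None:
        # In a real deployment the probe would be e.g. an HTTP or TCP check.
        for server in self.servers:
            server.healthy = probe(server)

    def pick(self) -> Server:
        # Try each server at most once, starting from the rotation position.
        n = len(self.servers)
        for _ in range(n):
            server = self.servers[self._index % n]
            self._index += 1
            if server.healthy:
                return server
        raise RuntimeError("no healthy servers available")


pool = RoundRobinPool([Server("web1"), Server("web2"), Server("web3")])
pool.run_health_checks(lambda s: s.name != "web2")  # pretend web2 is down
print([pool.pick().name for _ in range(4)])  # ['web1', 'web3', 'web1', 'web3']
```

With one of three servers down, requests are still served, but by two servers instead of three: availability is kept at the cost of capacity.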

With Kubernetes, application availability starts with deciding how a service is made available to external clients through a Kubernetes Service configuration, for which there are a number of Service types to choose from. Some services, such as backend code, may not need to be accessible from external clients, while others, such as a front-end web page, do. Rules that steer traffic as it enters the Kubernetes cluster may also be defined; these are commonly referred to as Ingress policy. Advanced features such as caching and SSL offloading may then be configured either inside or outside Kubernetes. And since the application is now made up of a number of separate microservices, consideration must also be given to traffic between services (East-West traffic).
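As a rough illustration of what an Ingress policy does, the following Python sketch maps a request's hostname and path to a backend service, preferring the most specific path rule. It is a simplified model only: the `Rule` type, the `route` function, the hostname and the service names are hypothetical, and real Ingress controllers support more match types than this.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Rule:
    host: str
    path_prefix: str
    service: str  # name of the backend service (hypothetical)


def _segments(path: str) -> List[str]:
    return [part for part in path.split("/") if part]


def _prefix_matches(prefix: str, path: str) -> bool:
    # Match whole path segments, so /api matches /api/v1 but not /apiary.
    wanted = _segments(prefix)
    return _segments(path)[:len(wanted)] == wanted


def route(rules: List[Rule], host: str, path: str) -> Optional[str]:
    """Return the service for the longest matching prefix, or None if no rule matches."""
    candidates = [r for r in rules
                  if r.host == host and _prefix_matches(r.path_prefix, path)]
    if not candidates:
        return None
    best = max(candidates, key=lambda r: len(_segments(r.path_prefix)))
    return best.service


rules = [
    Rule("shop.example.com", "/", "frontend"),
    Rule("shop.example.com", "/api", "orders-api"),
    Rule("shop.example.com", "/api/payments", "payments-api"),
]
print(route(rules, "shop.example.com", "/api/payments/42"))  # payments-api
print(route(rules, "shop.example.com", "/apiary"))           # frontend
print(route(rules, "other.example.com", "/"))                # None
```

Only the services named in rules like these are reachable from outside; a backend service with no rule remains internal to the cluster.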

While the challenges of monolithic services and microservices differ, there is no need to be daunted. With Kemp Ingress Controller, both monolithic and microservice applications can be managed side by side using a common interface.

Summary

It is clear that the move to Kubernetes impacts not only development teams but also any role responsible for application and network availability. Of all the changes, perhaps the biggest impact is in how an application is exposed by a Kubernetes Service. In the next section, we look at the different Service options.

To take full advantage of Kemp Ingress Controller functionality see here…

The Ingress Controller Series

- WHAT IS KUBERNETES?

- IMPACT OF KUBERNETES ON NETWORK ADMINISTRATORS

- EXPOSING KUBERNETES SERVICES

- THE KEMP INGRESS CONTROLLER EXPLAINED

- A HYBRID APPROACH TO MICROSERVICES WITH KEMP

- MANAGING THE RIGHT LEVEL OF ACCESS BETWEEN NETOPS AND DEV FOR MICROSERVICE PUBLISHING

Upcoming Webinar

For more information, check out our upcoming webinar: Integrating Kemp load balancing with Kubernetes.